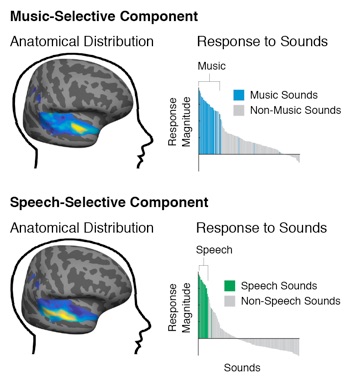

Our study introduces a novel approach (‘voxel decomposition’) for inferring neural populations from fMRI responses to a large stimulus set. The results of our study suggest that human auditory cortex contains distinct neural populations selective for music and speech, respectively.

This work was recently featured in two New York Times articles by Natalie Angier:

New Ways Into the Brain’s ‘Music Room’

Lending Her Ears to an MIT Experiment

As well as the MIT News:

For more information:

Sam Norman-Haignere’s website.

Compared with other sensory systems, relatively little is known about the functional organization of human auditory cortex. This study introduces a method for inferring sensory functional organization from fMRI responses to a large set of natural sounds, without needing to explicitly enumerate a set of specific hypotheses. Each fMRI voxel is modeled as a weighted sum of a small number of canonical response profiles to the sound set (‘components’), each potentially reflecting a different neuronal subpopulation within auditory cortex. The method then searches the large space of possible response profiles for those that best explain the voxels, given statistical criteria. We found that six response profiles (six components) were sufficient to explain more than 80% of the voxel response variation to natural sounds in auditory cortex (across more than 10,000 voxels from 10 human subjects). Moreover, the response profiles inferred by our analysis had interpretable functional properties and stereotyped anatomical weights across voxels, even though no functional or anatomical criteria contributed to their discovery. Four components reflected selectivity for particular acoustic dimensions (e.g., frequency, pitch, spectrotemporal modulation) and explained responses in different subregions in and around primary auditory cortex. Two other components were strikingly selective for speech and music, respectively, and occupied distinct regions of non-primary auditory cortex. The finding of a putative neural population selective for music is particularly notable because prior studies (including unpublished work from our own lab) have not revealed clear evidence for music selectivity (Leaver & Rauschecker, 2010; Angulo-Perkins et al., 2014). We show that music selectivity is diluted in raw voxels, which are the focus of standard fMRI analyses, plausibly because each voxel reflects the pooled response of many thousands of neurons. Voxel decomposition was thus critical to detecting music selectivity, because it overcomes the coarse spatial resolution of standard fMRI measurements. Our findings suggest that representations of music and speech diverge in non-primary pathways of the human brain.
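The "weighted sum of a small number of response profiles" model can be illustrated with a minimal sketch. This is not the decomposition algorithm used in the study: the statistical criteria that select the components are not spelled out here, so the sketch substitutes a plain truncated SVD, which gives the best rank-k approximation in the least-squares sense but does not by itself yield interpretable components. The data are simulated, the matrix sizes and noise level are arbitrary, and all function names (`simulate_voxels`, `low_rank_fit`, `variance_explained`) are hypothetical.

```python
import numpy as np


def simulate_voxels(n_sounds=100, n_voxels=2000, n_components=6,
                    noise_sd=0.5, seed=0):
    """Simulate a sounds-by-voxels matrix as a weighted sum of components.

    All sizes and the noise level are arbitrary illustrative choices,
    not values taken from the study.
    """
    rng = np.random.default_rng(seed)
    # Each column of R is one component's response profile across sounds.
    R = rng.standard_normal((n_sounds, n_components))
    # Each column of W holds one voxel's non-negative component weights.
    W = rng.gamma(shape=1.0, scale=1.0, size=(n_components, n_voxels))
    noise = noise_sd * rng.standard_normal((n_sounds, n_voxels))
    return R @ W + noise, R, W


def low_rank_fit(D, k):
    """Rank-k least-squares fit D ~ R_hat @ W_hat via truncated SVD.

    A stand-in for the study's component search, not a reimplementation.
    """
    Dc = D - D.mean(axis=0)                      # demean each voxel across sounds
    U, s, Vt = np.linalg.svd(Dc, full_matrices=False)
    R_hat = U[:, :k] * s[:k]                     # estimated response profiles
    W_hat = Vt[:k]                               # estimated voxel weights
    return R_hat, W_hat


def variance_explained(D, k):
    """Fraction of voxel response variance captured by the rank-k fit."""
    Dc = D - D.mean(axis=0)
    s = np.linalg.svd(Dc, compute_uv=False)      # singular values, descending
    return float(np.sum(s[:k] ** 2) / np.sum(s ** 2))
```

Calling `variance_explained(D, 6)` on a matrix from `simulate_voxels()` gives the kind of summary statistic reported above (variance explained by six components), here for synthetic data only. Any rotation of the fitted profiles explains the voxels equally well, which is why additional statistical criteria are needed to single out one particular set of components.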