In everyday listening, sound reaches our ears directly from a source as well as indirectly via reflections known as reverberation. Reverberation profoundly distorts the sound from a source, yet humans can both identify sound sources and distinguish environments from the resulting sound, via mechanisms that remain unclear. The core computational challenge is that the acoustic signatures of the source and environment are combined in a single signal received by the ear. We investigate the hypothesis that the human auditory system utilizes the natural statistics of environmental reverberation to separately infer sound and space from a reverberant signal.

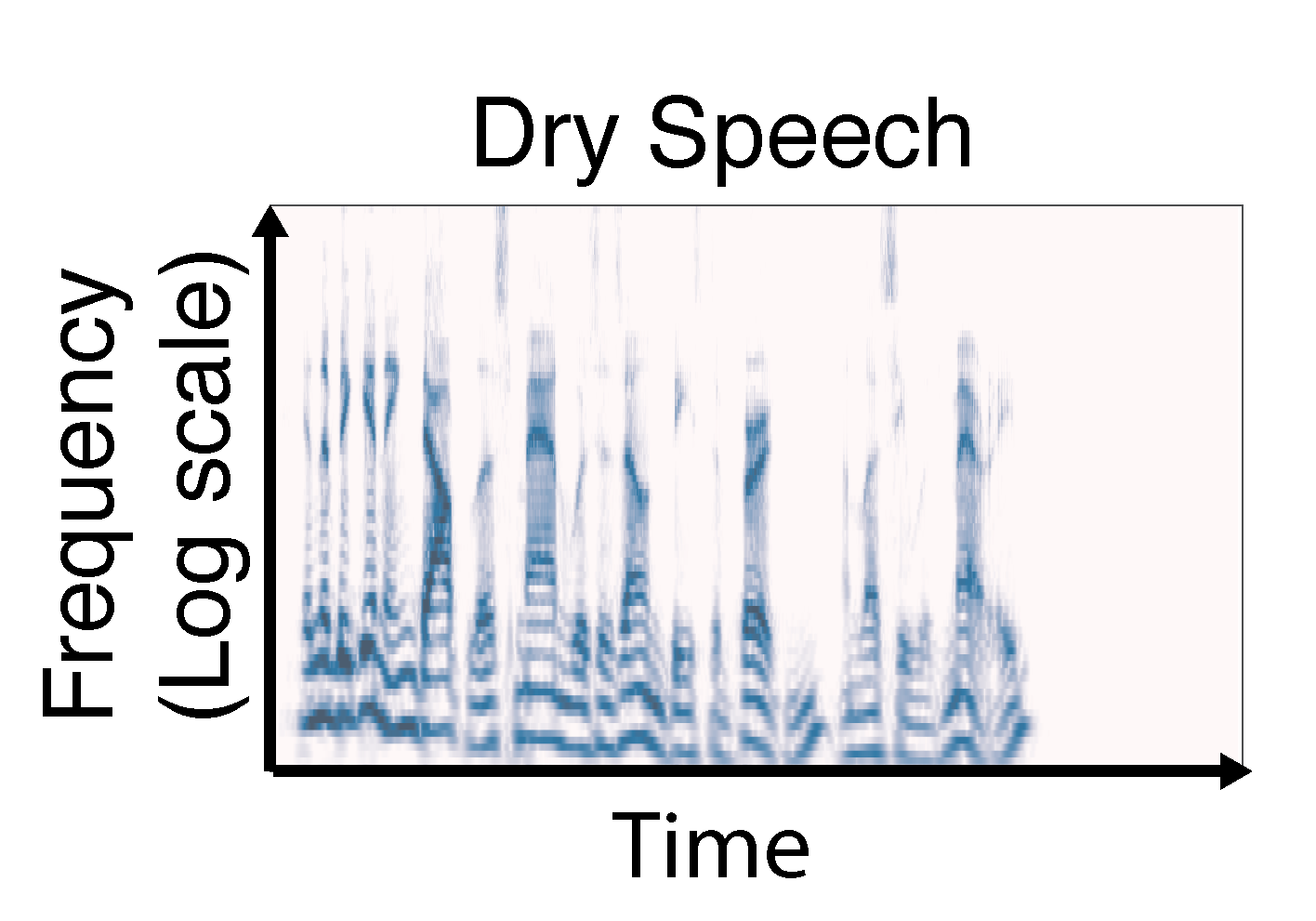

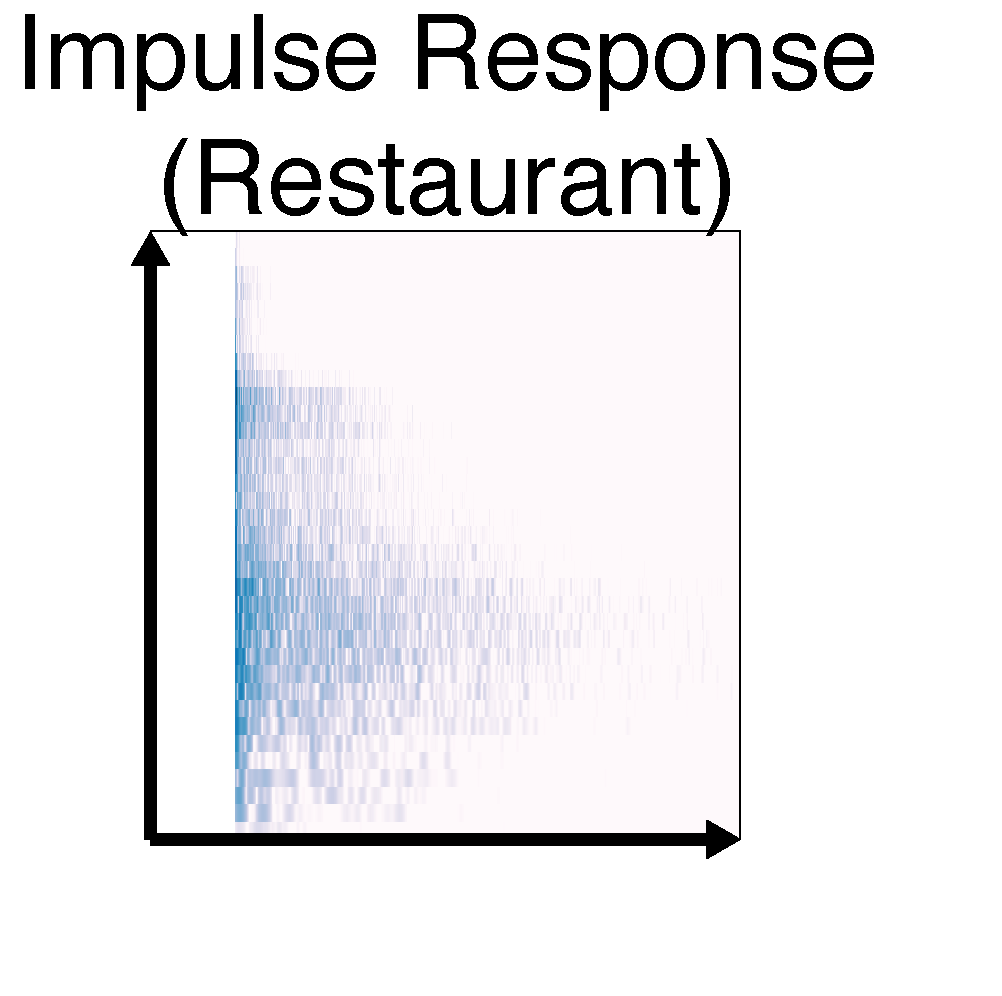

Reverberation can be modelled as linear filtering: a clean sound source (here, dry speech) is convolved with an impulse response (IR), i.e., the response of the space to an impulse source.
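The filtering model described above can be sketched in a few lines. This is a minimal illustration, assuming NumPy is available; the function name `apply_reverb` and the toy signals are hypothetical and not taken from the study.

```python
import numpy as np

def apply_reverb(dry, ir):
    """Convolve a dry source with an impulse response (linear filtering)."""
    dry = np.asarray(dry, dtype=float)
    ir = np.asarray(ir, dtype=float)
    # Full convolution: output length is len(dry) + len(ir) - 1.
    return np.convolve(dry, ir)

# Toy signals (illustrative only): a single click as the dry source,
# and an impulse response with a direct path plus two weaker echoes.
dry = np.array([1.0, 0.0, 0.0, 0.0])
ir = np.array([1.0, 0.0, 0.5, 0.25])
wet = apply_reverb(dry, ir)
```

Because the dry source here is a single click, the reverberant output simply reproduces the impulse response, which is what "the response of the space to an impulse source" means.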

Measuring natural statistics of reverberation

We recruited 7 volunteers and sent them randomly timed text messages 24 times a day for two weeks. Participants responded to each text with the address and a photograph of their location. We then attempted to visit each location and measure the IR. IRs were measured with an apparatus that recorded a long-duration, low-volume noise signal produced by a speaker. Because the noise signal and the apparatus transfer function were known, the IR could be inferred from the recording. The long duration allowed background noise to be averaged out, and, along with the low volume, permitted IR measurements in public places. We measured IRs from 271 distinct survey sites.

The measurement apparatus in a range of survey locations (from left): Gym, supermarket, forest, restaurant, department store. (See IR Survey for audio from the survey.)
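One way to recover an impulse response from a recording of a known noise signal is regularized spectral division. The sketch below is an assumption for illustration, not necessarily the procedure used in the survey: the helper `estimate_ir`, the regularization constant `eps`, and the simulated signals are all hypothetical, and the apparatus transfer function and background noise are ignored.

```python
import numpy as np

def estimate_ir(recording, probe, ir_len, eps=1e-12):
    """Estimate an IR by regularized spectral division (hypothetical helper).

    recording: the probe signal as captured in the room
    probe:     the known noise signal that was played
    ir_len:    number of IR samples to keep
    """
    n = len(recording)
    rec_spec = np.fft.rfft(recording, n)
    probe_spec = np.fft.rfft(probe, n)  # zero-pads the probe to length n
    # Divide out the probe spectrum; eps guards against near-zero bins.
    ir_spec = rec_spec * np.conj(probe_spec) / (np.abs(probe_spec) ** 2 + eps)
    return np.fft.irfft(ir_spec, n)[:ir_len]

# Simulated measurement (illustrative only): white noise played through
# a short, exponentially decaying noise IR, with no background noise.
rng = np.random.default_rng(0)
probe = rng.standard_normal(2048)
true_ir = rng.standard_normal(64) * np.exp(-np.arange(64) / 10.0)
recording = np.convolve(probe, true_ir)
est_ir = estimate_ir(recording, probe, ir_len=64)
```

In a real measurement the recording also contains background noise, which is why a long probe signal helps: the estimate averages over more data.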

Analyzing reverberant impulse responses (IRs)

To examine how the IR affects information in peripheral auditory representations, we represented IRs as "cochleagrams", which are intended to capture the representation sent to the brain by the auditory nerve. Each cochleagram was obtained by processing the sound waveform with a filter bank that mimicked the frequency selectivity of the cochlea and extracting the amplitude envelope from each filter. Despite the diversity of spaces (including elevators, forests, bathrooms, subway stations, stairwells, and street corners), the IRs showed several consistent features when viewed in this way:

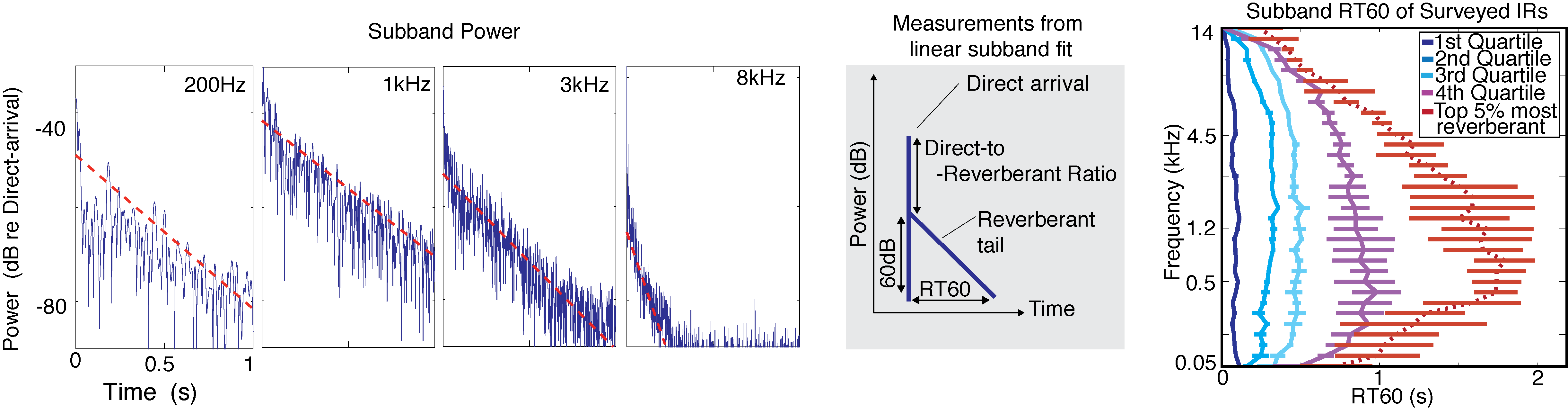

- Within ~50 ms after the first arrival of sound, IRs exhibit Gaussian statistics

- Reverberant energy decays exponentially

- Mid-frequencies (200-2000 Hz) decay more slowly than higher or lower frequencies

- The frequency variation of decay rate becomes more pronounced as the overall amount of reverberant energy increases

From left: Power decay with time in four cochlear subbands. In each subband an exponential decay (i.e., a straight line when power is expressed in dB) is fit to the data by least squares (red dashed line). The reverberation time (RT60) is extracted from the fitted decay rates and plotted for the surveyed data, grouped into quartiles according to the magnitude of reverberation. (See Figures 3 and 4 for more details.)
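The relationship between a fitted decay rate and RT60 can be illustrated with a short sketch. RT60 is the time taken for power to fall by 60 dB, so for a fitted slope in dB per second it equals -60 divided by the slope. The helper `fit_rt60` and the synthetic decay below are hypothetical; a real subband envelope would also need the fit restricted to the portion above the noise floor, which this sketch omits.

```python
import numpy as np

def fit_rt60(power, fs):
    """Fit a line to power in dB; return (RT60 in seconds, slope in dB/s)."""
    t = np.arange(len(power)) / fs
    power_db = 10.0 * np.log10(power)
    # Least-squares straight-line fit: power_db ~ slope * t + intercept.
    slope, intercept = np.polyfit(t, power_db, 1)
    return -60.0 / slope, slope

# Synthetic subband power (illustrative only): an ideal exponential
# decay that drops 60 dB in 0.4 seconds, sampled at 1 kHz.
fs = 1000.0
t = np.arange(500) / fs
true_rt60 = 0.4
power = 10.0 ** (-6.0 * t / true_rt60)
rt60, slope = fit_rt60(power, fs)
```

For this noiseless decay the fit recovers the 0.4 s reverberation time, corresponding to a slope of -150 dB per second.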

Synthesizing IRs

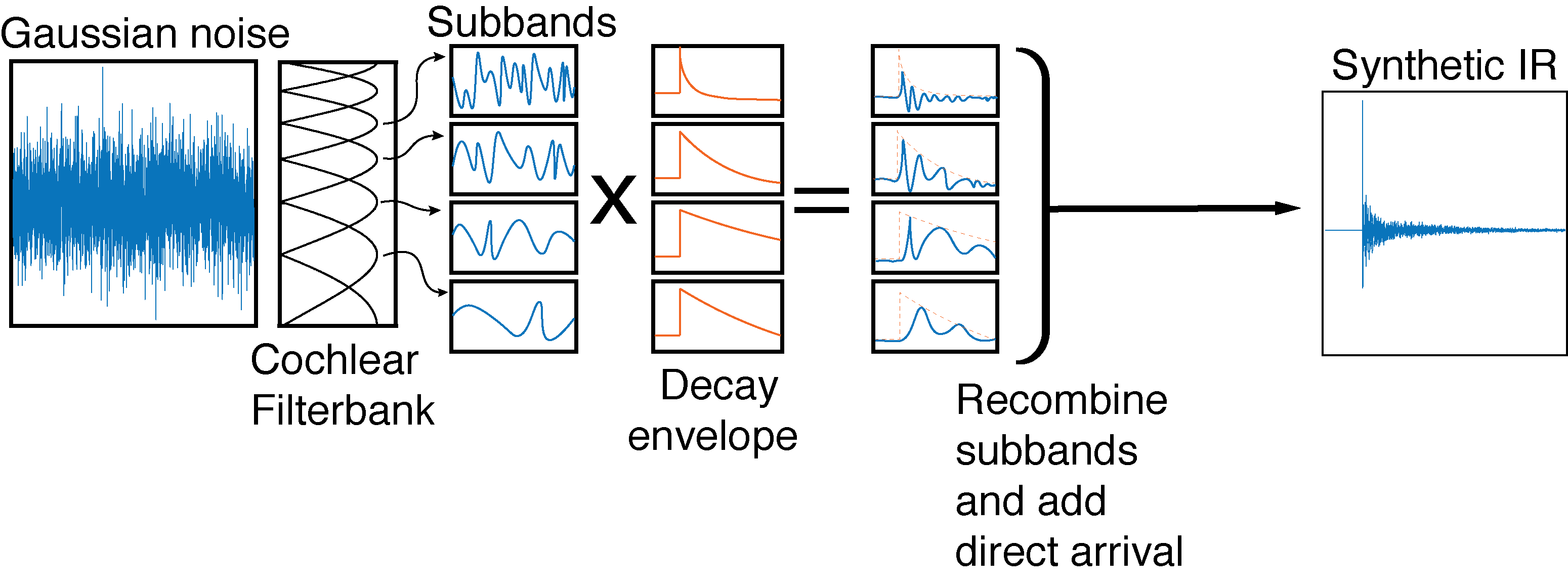

We tested whether human listeners were sensitive to the regularities we observed in real-world IRs by synthesizing IRs that were either consistent or inconsistent with these regularities. We synthesized IRs by imposing different types of energy decay on noise filtered into simulated cochlear frequency channels.

Schematic of IR synthesis. (See Audio demos to hear synthetic IRs.)
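The synthesis idea, imposing a decay on band-limited noise, can be sketched roughly as follows. This is a simplified illustration under stated assumptions: it uses brick-wall FFT bands rather than simulated cochlear filters, and the function `synthesize_ir`, the band edges, and the RT60 values are hypothetical choices, not the parameters used in the experiments.

```python
import numpy as np

def synthesize_ir(band_edges_hz, rt60_s, fs, duration_s, seed=0):
    """Sum noise bands, each with its own exponential decay (hypothetical)."""
    rng = np.random.default_rng(seed)
    n = int(round(fs * duration_s))
    t = np.arange(n) / fs
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    noise_spec = np.fft.rfft(rng.standard_normal(n))
    ir = np.zeros(n)
    for (lo, hi), rt60 in zip(band_edges_hz, rt60_s):
        # Brick-wall band selection (a stand-in for a cochlear filter).
        mask = (freqs >= lo) & (freqs < hi)
        band = np.fft.irfft(noise_spec * mask, n)
        # Amplitude envelope that falls by 60 dB over rt60 seconds.
        ir += band * 10.0 ** (-3.0 * t / rt60)
    return ir

# Illustrative parameters: the mid band decays more slowly than the
# low and high bands, loosely mirroring the surveyed regularity.
bands = [(100, 200), (200, 2000), (2000, 8000)]
rt60s = [0.3, 0.6, 0.3]
fs = 16000
synthetic_ir = synthesize_ir(bands, rt60s, fs=fs, duration_s=1.0)
```

Changing the per-band RT60 values, or swapping the exponential envelope for another shape, gives IRs that depart from the surveyed regularities in the way the experiments required.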

Perception of synthetic IRs

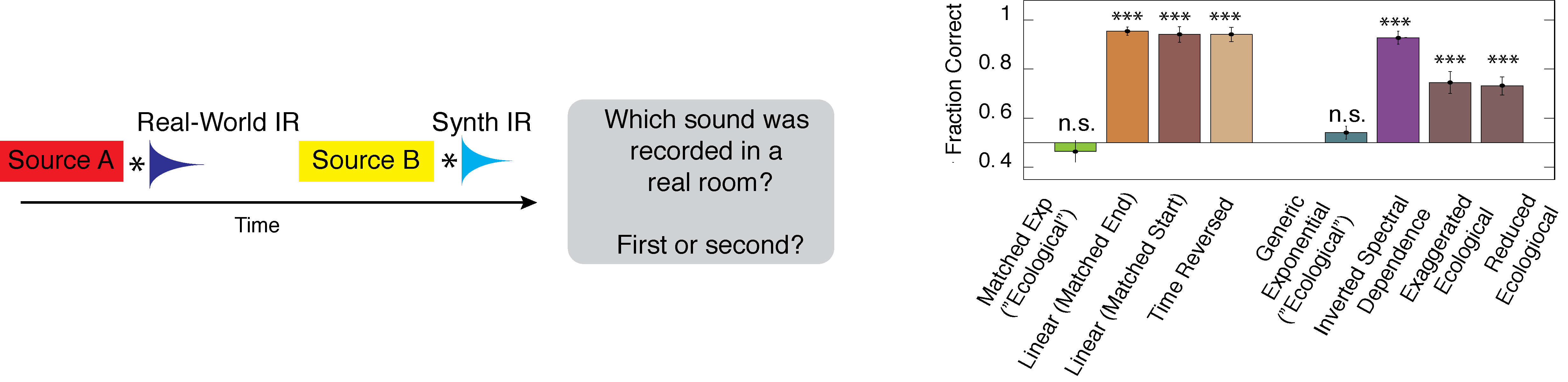

To assess whether the synthetic IRs replicated the perceptual qualities of real-world reverberation, we asked listeners to discriminate between real and synthetic reverberation. Participants were presented with two sounds and asked to identify which one was recorded in a real space. Performance was poor unless the synthetic IRs replicated the natural statistics that we observed, suggesting that these regularities capture the perceptually important effects of reverberation.

Task schematic (left) and results (right) for the reverberation realism experiment. The "ecological" IRs were synthetic IRs that conformed to the surveyed distribution; all the others violated the distribution in some way. (See Figure 6 for more details.)

Perceptual separation of source and IR

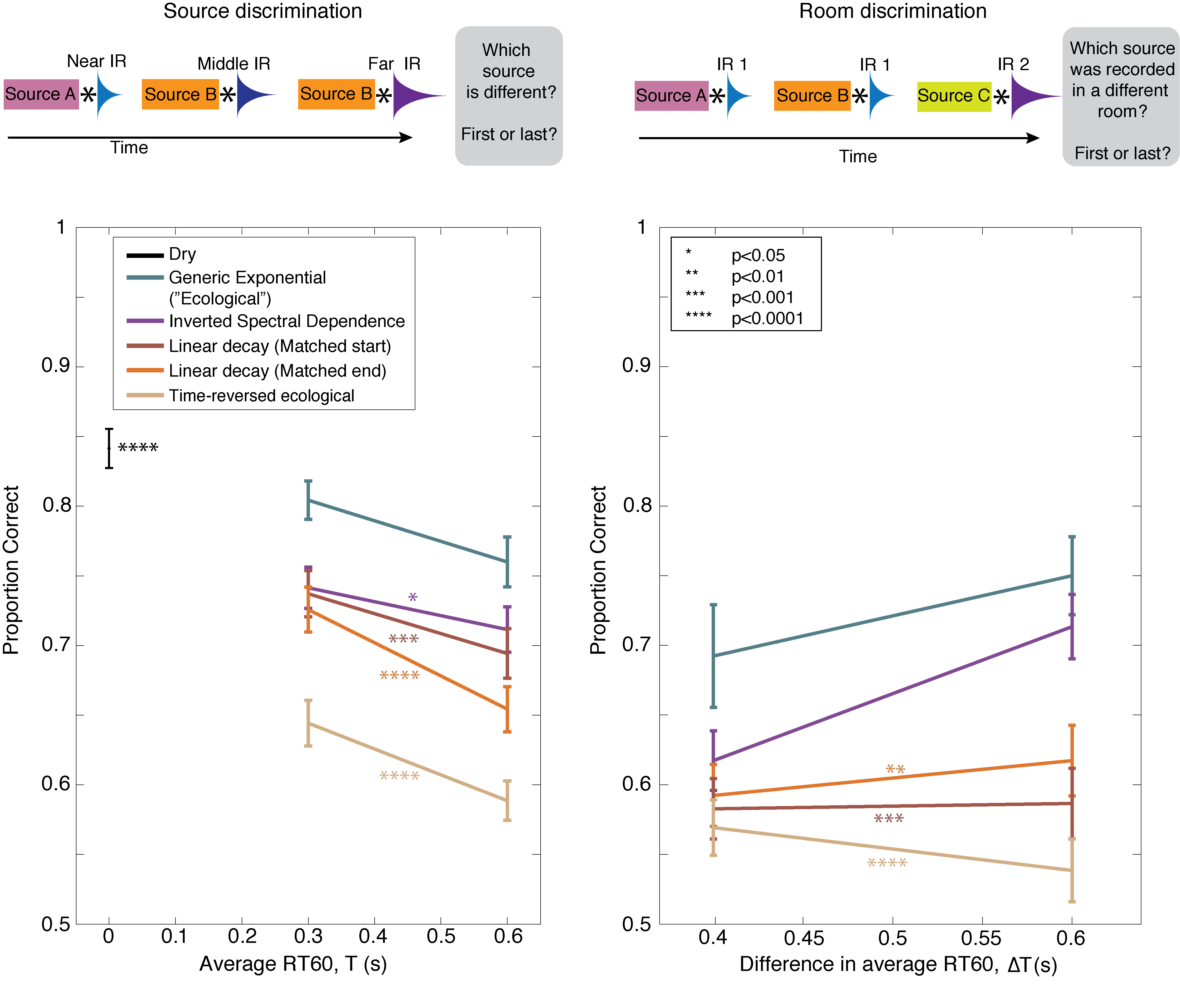

We tested whether humans can separately estimate source and filter from reverberant sound, and whether any such ability depends on conformity to the regularities present in real-world reverberation. Participants heard synthetic sources convolved with synthetic IRs. One task measured discrimination of the sources ("Which sound is different?"), while another measured discrimination of the IRs ("Which sound was recorded in a different room?"). In both cases the sources were designed to be structured but unfamiliar, and the various types of synthetic IRs were equated for the distortion that they induced in the cochleagram, to minimize the chance that performance might simply reflect differences in such distortion. Participants were better at both tasks when the IRs exhibited natural reverberation statistics, suggesting that the ability to separate the effects of source and filter leverages prior knowledge of natural reverberation.

Task schematic and results for the source/IR separation experiments: source discrimination (left) in the presence of varying reverberation, and IR discrimination (right) in the presence of varying sources. (See Figure 7 for more details.)